🍁 PixelRNN & PixelCNN (17.09.2022)

Images as Sequences of Pixels, by Hidir Yesiltepe

🍁 Introduction

As part of an exploration of autoregressive models, in this blog post we distill the core ideas of the Pixel Recurrent Neural Networks paper, published by DeepMind in 2016. This near-classic paper demonstrates how autoregression can be combined with standard deep neural network components while preserving the central idea behind autoregressive models.

🍁 Task

PixelRNN is a generative model for images. Modeling the joint distribution of pixels is a challenging problem in unsupervised learning for several reasons. First, images are highly structured data, so individual pixels are strongly correlated with one another. Second, representing this complex distribution requires a model that is expressive and scalable yet still tractable, and satisfying all three requirements at once is difficult.

One natural way to model the joint distribution is to factorize it into a product of conditional distributions using the chain rule of probability:

$$ P(x) = P(x_1, \dots, x_N) = \prod_{i=1}^N P(x_i | x_1, \dots, x_{i-1}) \tag{1}$$

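This turns a seemingly unsupervised problem into a sequence of supervised prediction problems: predict each pixel from the pixels that precede it. Recall that the idea of autoregressive models is to represent each conditional probability with a neural network while ensuring that its output is a valid distribution: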

$$ 0 \leq P(x_i | x_{< i}) \leq 1 \tag{2}$$

$$ \sum_{x_i \in \mathcal{X}} P(x_i | x_{< i}) = 1 \tag{3}$$
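To see how Equations (1), (2) and (3) fit together, here is a minimal sketch under stated assumptions: three "pixels" that each take one of four intensity levels, and a hypothetical `toy_logits` function standing in for the neural network. Each conditional comes out of a softmax, so it satisfies Equations (2) and (3) by construction, and multiplying the conditionals as in Equation (1) yields a joint distribution that sums to one over all 64 possible images.

```python
import itertools
import math

def softmax(logits):
    """Turn arbitrary real scores into probabilities satisfying Equations (2) and (3)."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_logits(prefix, num_values):
    """Hypothetical stand-in for a neural network: one score per possible value
    of the next pixel, computed only from the previous pixels (the prefix)."""
    s = sum(prefix)
    return [math.sin(v + 0.7 * s + len(prefix)) for v in range(num_values)]

def conditional(prefix, num_values):
    """P(x_i | x_{<i}) as a list indexed by the value of x_i."""
    return softmax(toy_logits(prefix, num_values))

def joint_probability(x, num_values):
    """Equation (1): multiply the conditionals along the sequence."""
    prob = 1.0
    for i, value in enumerate(x):
        prob *= conditional(x[:i], num_values)[value]
    return prob

# Three "pixels", each with 4 intensity levels: 4**3 = 64 possible images.
num_pixels, num_values = 3, 4
total = sum(joint_probability(x, num_values)
            for x in itertools.product(range(num_values), repeat=num_pixels))
print(round(total, 10))  # 1.0
```

The softmax is what enforces the two constraints. The PixelRNN paper likewise models each pixel value with a discrete softmax over 256 intensities; `toy_logits` here is purely illustrative.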

For images, Equation (1) means that the probability of each pixel is conditioned on all previous pixels, where "previous" refers to raster-scan order: row by row, and from left to right within each row. Below you see this conditioning in action.
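The following minimal sketch (all function names are hypothetical, and pixels are assumed to be single-channel grayscale values) makes the ordering concrete. `raster_scan` flattens an image row by row, `training_pairs` shows how one image becomes a set of supervised (context, target) examples, and `context_mask` marks which pixels a given pixel is conditioned on.

```python
def raster_scan(image):
    """Flatten a 2-D image (list of rows) row by row, left to right."""
    return [pixel for row in image for pixel in row]

def training_pairs(image):
    """Turn one image into supervised (context, target) examples:
    the target is pixel i and the context is every pixel before it."""
    seq = raster_scan(image)
    return [(seq[:i], seq[i]) for i in range(len(seq))]

def context_mask(height, width, row, col):
    """1 where a pixel lies in the conditioning context of pixel (row, col):
    all rows above it, plus the pixels to its left in the same row."""
    target = row * width + col  # index of (row, col) in the raster-scan sequence
    return [[1 if r * width + c < target else 0 for c in range(width)]
            for r in range(height)]

print(training_pairs([[10, 20], [30, 40]]))
# [([], 10), ([10], 20), ([10, 20], 30), ([10, 20, 30], 40)]

for line in context_mask(4, 5, 2, 3):
    print(" ".join(str(v) for v in line))
# 1 1 1 1 1
# 1 1 1 1 1
# 1 1 1 0 0
# 0 0 0 0 0
```

The first pixel has an empty context, so its distribution is unconditional, while the last pixel is conditioned on every other pixel in the image.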

Apart from generating new images, density estimation models can also be used for image completion, image compression, and deblurring.

🍁 Contributions

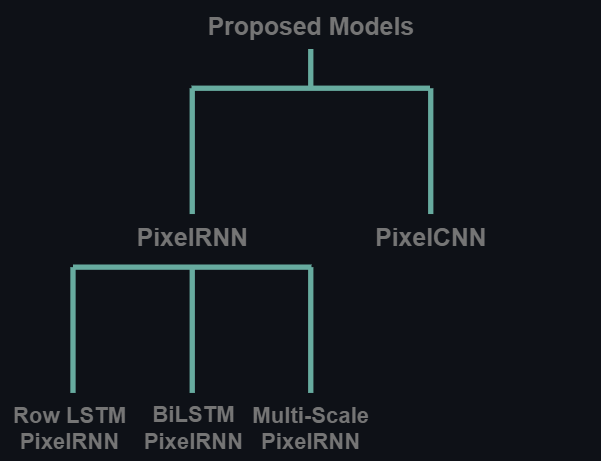

The Pixel Recurrent Neural Networks paper is rich in contributions to unsupervised learning. Although the title suggests a single model, the authors describe several variations of the same core idea. Below you see the complete set of models introduced in the paper.

PixelRNN is built on a particular RNN architecture, the LSTM. The proposed variants differ in how dependencies between pixels are defined, how the receptive field of each pixel is shaped, and consequently how long-range dependencies are captured. Three main PixelRNN architectures are proposed: Row LSTM PixelRNN, Diagonal BiLSTM PixelRNN, and Multi-scale PixelRNN.

🍁 LSTM Equations

We start our discussion of PixelRNN by reviewing the LSTM equations, and then we will see how these equations can be computed using one-dimensional convolutions.
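As a reference point before the convolutional version, here is a minimal sketch of one step of a standard fully connected LSTM cell in plain Python. The gate names, the `params` layout, and the random weights are assumptions for illustration only. In the Row LSTM, the matrix products with the input and with the previous hidden state are replaced by one-dimensional convolutions, which this sketch does not show.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def lstm_step(x, h_prev, c_prev, params):
    """One step of a standard LSTM cell.

    params maps each gate name ('i', 'f', 'o', 'g') to (W, U, b): input weights,
    recurrent weights and bias. Returns the new hidden state h and cell state c.
    """
    pre = {gate: [a + r + bias for a, r, bias in zip(matvec(W, x), matvec(U, h_prev), b)]
           for gate, (W, U, b) in params.items()}
    i = [sigmoid(z) for z in pre["i"]]    # input gate
    f = [sigmoid(z) for z in pre["f"]]    # forget gate
    o = [sigmoid(z) for z in pre["o"]]    # output gate
    g = [math.tanh(z) for z in pre["g"]]  # candidate cell content
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]  # c_t = f*c_{t-1} + i*g
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]                    # h_t = o*tanh(c_t)
    return h, c

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Hypothetical sizes and random weights, for illustration only.
random.seed(0)
n_in, n_hidden = 3, 4
params = {gate: (rand_matrix(n_hidden, n_in), rand_matrix(n_hidden, n_hidden), [0.0] * n_hidden)
          for gate in "ifog"}

h, c = [0.0] * n_hidden, [0.0] * n_hidden
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):  # a short input sequence
    h, c = lstm_step(x, h, c, params)
print(h)
```

The cell state `c` is updated additively through the forget and input gates, which is what lets an LSTM carry information across long sequences; this is the property PixelRNN relies on to capture long-range dependencies between pixels.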